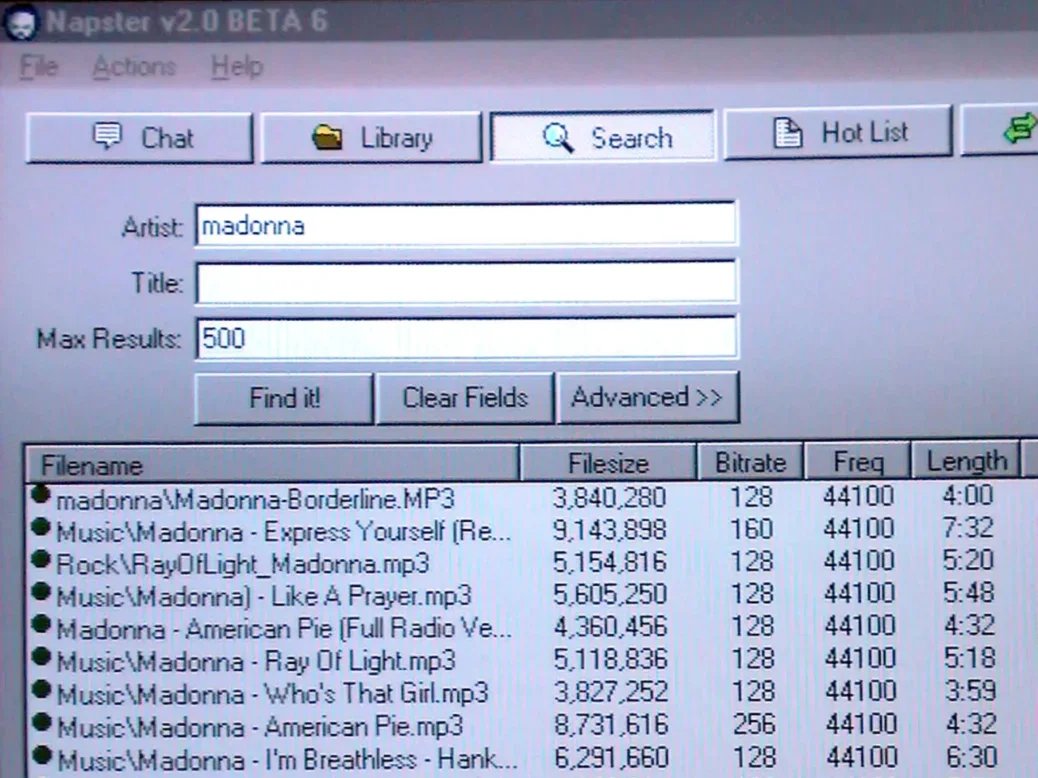

Remember Napster? The early 2000s rebel that let us all download music for free and turned the music industry on its head? Well, generative AI might just be today’s Napster for publishers – exciting, disruptive and causing a bit of panic all at once.

What can the news industry learn from recent history? There are some similarities with the Napster era. Back then, the music industry didn’t know what hit it. Record labels were losing money, artists were frustrated, and listeners were caught in the middle. It took years, lawsuits and an all-new platform called Spotify to find a balance.

The same thing could happen here – chaos first, solutions later. But this time, publishers should actively take steps to mitigate the threat of big tech taking the lion’s share of the spoils.

Let’s take a look at two key questions: What new revenue opportunities could generative AI offer publishers? And what role could content marketplaces play in this?

Six ways to protect publisher content from AI scrapers

Here are the actions publishers can – and should – take to protect their content from armies of content-scraping bots. (This was one of the topics I discussed with Dominic Ponsford on last week’s podcast.)

1) Terms of service

Make sure that your website’s terms of service explicitly state that you do not allow scraping or the use of any content for training AI. While this won’t stop all bots, it provides a legal foundation for pursuing action against offenders.

2) Robots.txt and meta tags

While robots.txt files and robots meta tags can’t prevent access, they signal to well-behaved bots which parts of your site to avoid. Tools such as Cloudflare, Dark Visitors and Netecea can automatically update and manage your robots.txt file and track the bots visiting your site.
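To illustrate how a well-behaved crawler interprets these rules, here is a minimal Python sketch using the standard library’s `urllib.robotparser`. The robots.txt content is an assumption for illustration. GPTBot (OpenAI), CCBot (Common Crawl) and Google-Extended (Google) are published AI-related user-agent tokens at the time of writing, but that list changes, so check each vendor’s own documentation. The sketch only models compliant bots. Nothing in robots.txt stops a scraper that chooses to ignore it.

```python
from urllib.robotparser import RobotFileParser

# Illustrative robots.txt: block some AI-related user agents from the whole
# site while allowing everyone else. The agent tokens listed here are examples
# only; confirm current names in each vendor's documentation.
ROBOTS_TXT = """\
User-agent: GPTBot
Disallow: /

User-agent: CCBot
Disallow: /

User-agent: Google-Extended
Disallow: /

User-agent: *
Allow: /
"""


def build_parser(robots_txt: str) -> RobotFileParser:
    """Parse robots.txt text the way a compliant crawler would."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser


if __name__ == "__main__":
    rp = build_parser(ROBOTS_TXT)
    url = "https://example.com/news/story"
    for agent in ("GPTBot", "CCBot", "Google-Extended", "Mozilla/5.0"):
        # can_fetch() reports whether this user agent may crawl the URL
        print(agent, rp.can_fetch(agent, url))
```

Running the script against your live file (via `RobotFileParser.set_url()` and `read()`) is a quick way to check that the rules you intended are the ones bots will actually see.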

3) Copyright notices

Ensure that your website and all your content are marked with copyright notices. This reinforces your ownership of the content and can be useful in legal disputes.

4) Set ‘honey pot traps’

A personal favourite – a honey pot is a hidden field on your website that users cannot see or interact with, but bots might attempt to fill out. If they do, it flags to you that a bot is at work, allowing you to take mitigating actions (e.g. blocking its IP address).
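A honey pot check needs only a few lines of code. The Python below is a minimal, framework-agnostic sketch. It assumes form submissions arrive as a dictionary of field names to values. The field name `website_url`, the `handle_submission` function and the in-memory `BotLog` are all hypothetical. A real site would wire the check into its web framework and firewall or bot-management tool.

```python
from dataclasses import dataclass, field

# Hypothetical hidden field name. Humans never see it, so a non-empty
# value strongly suggests an automated form-filler.
HONEYPOT_FIELD = "website_url"

# Illustrative markup for the trap: hidden with CSS, removed from the tab
# order, and with autocomplete off to help keep browser autofill out of it.
HONEYPOT_HTML = (
    f'<input type="text" name="{HONEYPOT_FIELD}" '
    'style="display:none" tabindex="-1" autocomplete="off">'
)


@dataclass
class BotLog:
    """In-memory record of IP addresses that tripped the honey pot."""
    flagged_ips: set[str] = field(default_factory=set)


def is_honeypot_triggered(form: dict[str, str]) -> bool:
    """Return True if the hidden honey pot field was filled in."""
    return bool(form.get(HONEYPOT_FIELD, "").strip())


def handle_submission(form: dict[str, str], client_ip: str, log: BotLog) -> str:
    """Reject submissions that fill the trap and flag the sender's IP."""
    if is_honeypot_triggered(form):
        # Record the IP so it can be reviewed or blocked later.
        log.flagged_ips.add(client_ip)
        return "rejected"
    return "accepted"


if __name__ == "__main__":
    log = BotLog()
    print(handle_submission({"email": "reader@example.com"}, "203.0.113.5", log))
    print(handle_submission({HONEYPOT_FIELD: "http://spam.example"}, "198.51.100.7", log))
    print(log.flagged_ips)
```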

5) Watermarking

Watermarking images and video can deter unauthorised use. Even if the content is scraped, the watermark may remain, making it clear where the content originated.

6) CAPTCHA and reCAPTCHA

These tools are designed to prevent bots from accessing content by requiring users to complete a test that’s easy for humans but difficult for machines. Implementing CAPTCHA on key pages, such as login forms or comment sections, can be effective in deterring bots.

These steps won’t provide an impermeable defence against bad bots, but it’s good to have the fundamental protections in place.

With content secured: how to make money from AI

Now to the positives: what new revenue opportunities could gen AI offer publishers?

In a word – licensing.

Now is the time for premium publishers to seize the opportunity to extract the B2B value lying dormant in their content archives by licensing it – on their terms – to gen AI developers.

The size and scale of the licensing revenue opportunity will be highly variable, so a good starting point is to invest in adding detailed and structured metadata to any content in your archive that isn’t properly tagged. This enhances the usability of content, and allows AI developers to accurately index and rank its value.
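What “detailed and structured metadata” looks like will depend on the buyer. As a rough sketch, assuming one JSON record per article, it might resemble the Python below. The `ArticleMetadata` class and every field in it are hypothetical examples. They are not a schema required by any marketplace or AI developer.

```python
import json
from dataclasses import asdict, dataclass, field
from datetime import date


@dataclass
class ArticleMetadata:
    # All field names are illustrative; adapt them to whatever schema a
    # licensing partner actually asks for.
    article_id: str
    headline: str
    published: date
    section: str
    authors: list[str]
    topics: list[str] = field(default_factory=list)
    word_count: int = 0
    # Publisher-controlled rights flag: may this piece be offered for AI training?
    ai_training_licensable: bool = False

    def to_json(self) -> str:
        """Serialise the record, converting the date to an ISO 8601 string."""
        record = asdict(self)
        record["published"] = self.published.isoformat()
        return json.dumps(record, sort_keys=True)


if __name__ == "__main__":
    meta = ArticleMetadata(
        article_id="a-001",
        headline="Example headline",
        published=date(2019, 5, 14),
        section="Business",
        authors=["Jane Doe"],
        topics=["media", "advertising"],
        word_count=850,
        ai_training_licensable=True,
    )
    print(meta.to_json())
```

Keeping the rights flag on each record mirrors the idea of deciding, piece by piece, what is open or closed to licensing.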

But that still leaves a challenge: how can publishers trade copyrighted content with AI developers at a fair value, and how should they go to market with it? Not all publishers can simply open conversations with the large frontier LLM companies.

Good news – a handful of marketplaces are becoming established that aim to bring together content rights owners and responsible AI developers who want to acquire copyrighted content to power their LLMs.

The proposition could be appealing to publishers – platforms that allow them to licence sections of their content in a safe, controlled, closed ecosystem where AI developers can responsibly acquire rights to content.

Human Native AI is one of the first such platforms available to UK publishers. Set up by James Smith and Jack Galilee last year, the platform allows rights owners to upload and catalogue their content and gives them complete control over which individual pieces of content are open or closed to AI training.

Monetisation is also flexible; publishers can licence their content or data for AI training on a subscription or revenue share basis. Human Native also offer a service where they can help publishers prepare their content or data so it is correctly tagged for AI models.

In my opinion, this type of platform potentially offers publishers a truly incremental revenue stream. Publishers can partner with these emerging marketplaces to safely monetise their archives in controlled environments, ensuring fair value and intellectual property protection.


Email pged@pressgazette.co.uk to point out mistakes, provide story tips or send in a letter for publication on our "Letters Page" blog