Leaders in newsroom use of generative artificial intelligence shared tips, tools and some optimistic predictions before the holidays at the NCTJ’s Artificial Intelligence in Journalism event.

To kick off the new year Press Gazette has rounded up the most useful lessons shared – as well as some opinions on what’s around the corner.

Read on for more on how Reuters has addressed the risk of AI data leakage, how to build your own AI copilot and why one Sun staffer thinks investment in generative AI should pay off for newsrooms “imminently”.

Reuters experiments with ChatGPT while keeping it inside ‘a good strong fence’

Several publishers have previously expressed caution about plugging their copy into ChatGPT. The Telegraph, for example, banned staff from using the tool for copy editing, warning that data leakage could breach both the publisher’s copyright and data protection laws.

Jane Barrett, the global editor of media news strategy at Reuters, told the event that the news agency has tried to address this by putting “a good strong fence around” the generative AI experimentation it has done.

“We have our own instance of ChatGPT through Microsoft Azure that therefore allows us to be able to play safely,” she said.

“And I think that’s kind of a big thing – experiment, but experiment responsibly. Play, but play safely. That was the first big meta thing that we learned.”

“Play” was a theme of Barrett’s conversation with Sky News presenter Vanessa Baffoe. Barrett said: “Everyone should be just doing it to play. Find your inner child, get on some of these tools…

“I said this at a conference a while ago, and somebody added a really important thing, which I’m so stealing: play and ask it about stuff that you know a lot about. Because then you understand its limitations and you’ll understand where it goes wrong.”

One limitation she highlighted as particularly relevant to journalists was ChatGPT’s understanding of quotes: “AI doesn’t know what a quote is… If you’re summarising a story, you want to keep the quote separate – you don’t want to summarise the quote as if it’s part of the story… We’re now working with our data scientists to work out – how can we teach the machine what a quote actually is and to leave them alone?”
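One simple way to keep quotes away from a summariser is to swap them for placeholders before the text is processed and put them back afterwards. The sketch below illustrates that idea only; it is not Reuters' method, which the talk did not describe. The pattern, the placeholder format and the function names `protect_quotes` and `restore_quotes` are all assumptions made for the example.

```python
import re

# Assumption: quotes are marked with curly or straight double quotation marks.
# Real copy (nested quotes, quotes running over several paragraphs) needs more care.
QUOTE_PATTERN = re.compile(r'“[^”]*”|"[^"]*"')


def protect_quotes(text):
    """Replace each quoted span with a placeholder; return the masked text and the quotes."""
    quotes = []

    def _swap(match):
        quotes.append(match.group(0))
        return f"[[QUOTE_{len(quotes) - 1}]]"

    return QUOTE_PATTERN.sub(_swap, text), quotes


def restore_quotes(text, quotes):
    """Put the original, untouched quotes back in place of their placeholders."""
    for index, quote in enumerate(quotes):
        text = text.replace(f"[[QUOTE_{index}]]", quote)
    return text


story = "The minister said: “We will not raise taxes this year.” Critics disagreed."
masked, saved = protect_quotes(story)
# `masked` is the version a summariser would see; here it is passed straight
# back to show that the quote survives the round trip word for word.
restored = restore_quotes(masked, saved)
```

A summariser working on `masked` can reword everything around a quote but cannot paraphrase the quote itself, because it only ever sees the placeholder.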

Newsquest’s head of editorial AI builds journalist AI assistant live on stage

Jody Doherty-Cove, head of editorial AI at Newsquest, said that because generative AI tools will improve and change at a fast rate, “the longest-lasting skill is knowing how to speak with AI”.

During the talk he revealed that in six months Newsquest had gone from hiring its first AI-assisted reporter to employing seven across the country.

[Read more: How Newsquest and its seven AI-assisted reporters are using ChatGPT]

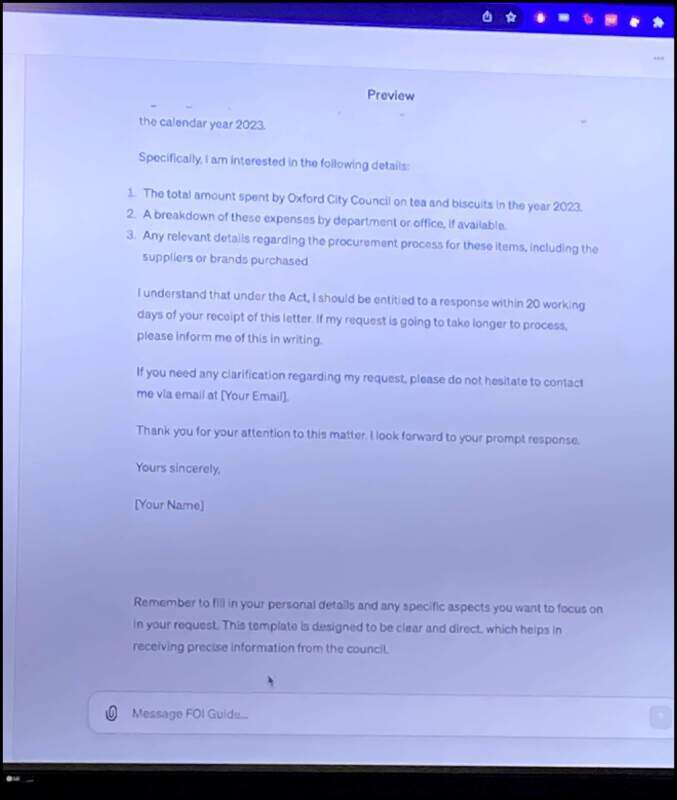

![A slide from a presentation given by Newsquest head of editorial AI Jody Doherty-Cove. It says "AI agents will change everything", before saying across a bulleted list that "digital assistants can undertake a range of tasks from in-depth research to data analysis and preliminary reporting"; that "journalists can delve deeper, using traditional human intelligence to craft narratives"; that it isn't "just about delegating tasks, it's about supercharging your abilities" and that "although it's built using GPT, it goes much further than ChatGPT. Once building [AI agents] will keep serving their purpose." A picture of a cute robot is then marked with arrows suggesting that an AI agent has three components: its knowledge base, the actions it has been made capable of doing, and its purpose - i.e. the work a journalist needs it to complete. Picture: Press Gazette](https://pressgazette.co.uk/wp-content/uploads/sites/7/2023/12/NewEuropeanMichelleMoneSue2-800x600.jpg)

Doherty-Cove said journalists would benefit most from AI by identifying the specific problems they need solved and setting up specialised “agents” – customised AI models – that address them.

Generative AI chatbots can alter their output depending on the prompts a user gives them. Doherty-Cove showed attendees a table of the sorts of prompts that can be given to an AI, including requests to restructure or reformat given information, to fetch information from elsewhere or to brainstorm more information on a given topic.

He then attempted, live on stage, to use ChatGPT’s GPT Builder feature to create an internet-linked agent that could write and send freedom of information requests. After entering a short series of prompts he succeeded in creating an agent that could format questions from the user into a full FOI request and fetch the FOI email addresses from local council websites.

He did not go through the more technically complicated task of giving it the ability to send an email, but said that this was possible and had already been done by Newsquest reporters.

He said that “one of the things, actually, when we were playing around with it and first linked it up with actions [the ability to interact with the web] – it sent one [an FOI request] without being told”. As a result, he added, it was important to tell the agent to present any email drafts to the reporter before sending.
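The safeguard described above, where nothing is sent until a reporter has seen the draft, can be sketched as an explicit approval gate. This is an illustration under stated assumptions, not Newsquest's agent: the `Draft` class, the function names, the wording of the request and the `transport` callable standing in for a real email sender are all hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Draft:
    to: str
    subject: str
    body: str
    approved: bool = False  # only a reporter should ever set this to True


def draft_foi_request(authority, foi_email, questions, reporter_name):
    """Format the reporter's questions as a numbered FOI request (wording is illustrative)."""
    numbered = "\n".join(f"{i}. {q}" for i, q in enumerate(questions, start=1))
    body = (
        f"Dear {authority},\n\n"
        "This is a request under freedom of information legislation. "
        "Please provide the following information:\n\n"
        f"{numbered}\n\n"
        f"Yours faithfully,\n{reporter_name}"
    )
    return Draft(to=foi_email, subject=f"Freedom of information request: {authority}", body=body)


def send(draft, transport):
    """Refuse to send anything a reporter has not explicitly approved."""
    if not draft.approved:
        raise PermissionError("Draft must be approved by a reporter before sending")
    transport(draft)


outbox = []  # stands in for a real mail system
draft = draft_foi_request(
    "Example Council",
    "foi@example.org",  # placeholder address
    ["How many potholes were reported in 2023?", "How many were repaired?"],
    "A. Reporter",
)

try:
    send(draft, outbox.append)  # blocked: nobody has reviewed it yet
    blocked = False
except PermissionError:
    blocked = True

draft.approved = True  # the reporter has read the draft and signed it off
send(draft, outbox.append)
```

Putting the check inside `send` rather than in the agent's instructions means an over-eager agent cannot skip it, which is the failure the anecdote describes.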

“Remember that [while] experimenting is really fun, publishing is very serious,” he said. “So make sure that you have safeguards in place. We've got a code of conduct across Newsquest… to make sure that everything we publish is correct.”

But he concluded: “AI agents are here, so move quickly and get your own.”

Bullish predictions for generative AI and journalism in the near term

While news industry predictions on the effects of artificial intelligence often emphasise the risks ahead, there were some notably optimistic comments at the NCTJ event.

For example, asked by Thomson Reuters interim managing editor for video and photography Joanna Webster when news outlets could expect to see “a return on this investment” in AI, The Sun’s Nadine Forshaw said it should be “imminently”.

“It really depends on what your proof of concepts are and what you’re doing,” she said.

“If you’re playing with it for fun, great – [but] maybe it won’t pay off straight away. If you’re looking at things that are really gonna save journalists time, that are going to allow you to repurpose content, whatever it might be – I think as long as what you’re building is tailored for the issues that you have in your news building, there is no reason payoff shouldn’t be fairly immediate.”

In a similarly bullish vein, Barrett said that at Reuters “we fully foresee a time when there will be some stories that can go out without a human overseeing every single story. But at that stage, we must make it very, very, very clear that this story has been done by AI.

“At present we put out some translations – only to our financial clients, I’ll say, because they just wanted more volume [and] they weren’t gonna pay us for the people to translate things. So we are trialling some automated translations which are not looked at by a human before they go out, but it says across the top in big letters: ‘This was translated by a machine.’”

Barrett added that Reuters already employs staff whose full-time job is to spot-check “a proportion of those every single day”.
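Spot-checking “a proportion” of machine output usually means drawing a random sample each day. The talk did not say how Reuters selects its sample, so the sketch below is only one plausible approach; the function name, the story ID format and the 5% rate are assumptions chosen for illustration.

```python
import random


def pick_spot_checks(story_ids, proportion, seed=None):
    """Return a random sample of story IDs for human review.

    `proportion` is the share of the day's output to check, between 0 and 1.
    At least one story is picked whenever there is any output at all.
    """
    if not 0 < proportion <= 1:
        raise ValueError("proportion must be greater than 0 and at most 1")
    if not story_ids:
        return []
    sample_size = max(1, round(len(story_ids) * proportion))
    # A seeded generator makes the day's selection reproducible for auditing.
    return random.Random(seed).sample(story_ids, sample_size)


todays_translations = [f"story-{n}" for n in range(200)]  # hypothetical IDs
to_review = pick_spot_checks(todays_translations, proportion=0.05, seed=1)
```

Sampling at random, rather than checking the first few items each day, gives every story the same chance of being reviewed.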

Manjiri Kulkarni Carey, the editor of the BBC’s innovation arm BBC News Labs, said the adoption of generative AI in newsrooms will change which skills publishers seek out from staff.

“I consider myself a kind of ‘bridge’ producer, because I can speak to software engineers, but I’m also a journalist – and there are just not enough people like this.

“The students that are coming through, they're going to need to do this more and more… If you can do those core skills, you can do anything. But the more skills you have, the more you can do these kind of bridging roles, the better.

“Because newsrooms are only going to evolve in that direction… they are going to be going: ‘how are we going to write a programme that will interrogate this 15,000 pages of data to get five stories out there?’

“You need to be able to distil and interpret and work with product people, engineering, all these technology people to get those stories.”

Gavin Allen, a digital and journalism lecturer at Cardiff University’s School of Journalism, Media and Culture, noted on the same theme that his course had begun teaching students how to use AI prompt engineering in their journalism.

More broadly, he said: “AI is, perhaps, an opportunity to make some money in this industry.”

Bonus: click through for the Google News Initiative's list of journalism and AI case studies.
