The rise of generative AI in journalism has provided a powerful tool for creating news content but also raises complex ethical questions around transparency, bias, and the role of human journalists.

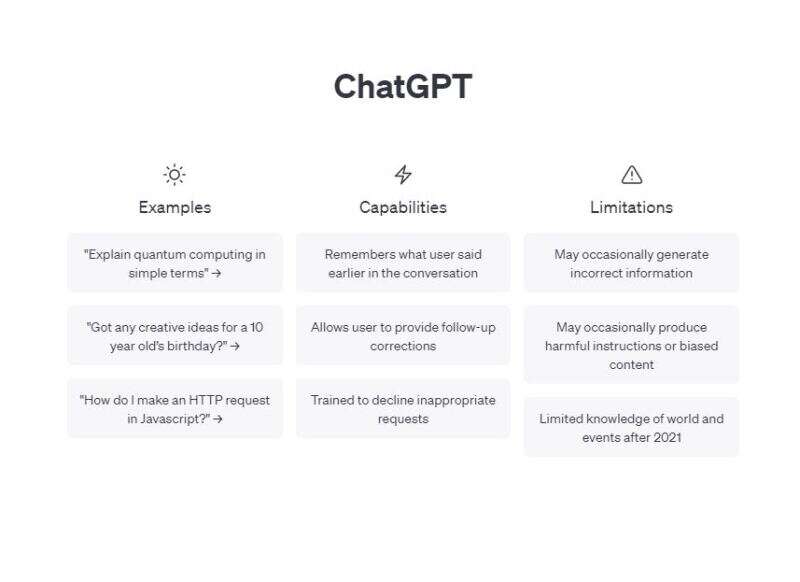

I will immediately come clean: The above sentence was written by AI chatbot ChatGPT, when asked for a one-sentence introduction to this article about the ethics of using such tools in journalism.

Does this mean I should give the chatbot a joint byline? Or perhaps I should not use a sentence generated by the bot in my writing at all?

Professor Charlie Beckett, director of LSE’s Journalism AI project, which produced a major report on this topic in 2019, told Press Gazette that trust, accuracy, accountability and bias continue to be the major ethical issues around AI, despite the new issues raised by the advent of generative AI such as OpenAI’s ChatGPT and image generator DALL-E, and Google’s Bard. The conversation has evolved rapidly over the past six months because these new chatbots are far more user-friendly than previous versions, making day-to-day use much more practical.

Beckett said the general consensus appears to be that newsrooms are “quite rightly” exploring the new generative AI technologies before they make big decisions about how they might or might not use them.

“I think that’s important that you don’t just suddenly say ‘okay, we’re going to ban using generative AI in our newsroom’ or ‘immediately from today we’re going to tell our readers everything’. I think it is good to pause and reflect.”

In some ways, he said, media organisations “don’t have to change anything… depending on what you’re doing with it. In some senses, you just have to stick to your values. So whatever your values were before, keep with them. If you don’t have any then good luck, but if you do have them then obviously it’s just a question of thinking practically about how it applies in this case”.

Several news organisations have already published guidelines on their use of AI tools to produce content, while others are still running taskforces, partly to decide what rules need to be set.

In March tech magazine brand Wired was one of the first to publish the ground rules it set for its journalists on using generative AI, saying it wants “to be on the front lines of new technology, but also to be ethical and appropriately circumspect”.

It came shortly after tech news rival CNET was criticised for publishing AI-generated content (some of which was false) under a generic staff byline that did not make the production process clear. It has now updated its processes to be more transparent, although Beckett suggested publishers may not always need to tell their audiences when AI has assisted in creating a story.

He said news organisations should consider it in terms of what they currently do: for example, whether they tell readers when copy comes directly from the PA wire, or make clear when they follow up a story from another outlet. Alternatively, they may want to tell readers they “used a clever AI programme to do this as a way of showing off” or as part of the storytelling on a big investigation.

But he added: “You certainly shouldn’t deceive the user. You shouldn’t say this is written by Charlotte Tobitt and in fact it was written by an algorithm. If you’re going to tell people be honest. Don’t say with AI assistance or something, just say, yeah, this is automated news, we review it, make sure the standards are there, but this is automated… I don’t want to sound complacent at all, but I think it’s largely common sense.”

Wired told readers it has decided not to publish stories with text generated or edited by AI, whether it creates the full story or just parts, “except when the fact that it’s AI-generated is the whole point of the story”. It said risks of the current AI tools available include the introduction of errors or bias, boring writing, and plagiarism. Therefore it decided: “If a writer uses it to create text for publication without a disclosure, we’ll treat that as tantamount to plagiarism.”

Wired journalists may, however, experiment with using AI to suggest headlines, text for social media posts, story ideas or help with research, but this will be acknowledged where appropriate and never lead to a final product without human intervention.

Heidi News, a French-language Swiss online media brand focusing on science and health, published its own “ethical charter” on AI earlier this month. Its editorial team said they did not want to ignore the potential uses of the technological advances but that they needed to maintain “the framework and ethics that govern our activity, and above all the relationship of trust with our readers”.

They therefore decided: “Editorial staff can use AI to facilitate or improve their work, but human intelligence remains at the heart of all our editorial production. No content will be published without prior human supervision.” To provide transparency to readers, they said every article will continue to be “signed” by at least one journalist “who remain guarantors of the veracity and relevance of the information it contains”.

Dutch news agency ANP has its own guidelines, in which it similarly said AI tools could be used to provide inspiration to its journalists but that it would not use any AI-produced information without human verification.

And German news agency DPA published guidelines specifically addressing the ethical implications and need for transparency: “DPA only uses legitimate AI that complies with applicable law and statutory provisions and meets our ethical principles, such as human autonomy, fairness and democratic values.

“DPA uses AI that is technically robust and secure to minimise the risk of errors and misuse. Where content is generated exclusively by AI, we make this transparent and explainable. A person is always responsible for all content generated with AI.”

Beckett said DPA’s five-point guidelines set out a good “basic approach you should take”, and he also praised older AI guidance that Bavarian broadcaster Bayerischer Rundfunk published in 2020, describing it as “very good general guidance”.

Part of the guidance reads: “We make plain for our users what technologies we use, what data we process and which editorial teams or partners are responsible for it. When we encounter ethical challenges in our research and development, we make them a topic in order to raise awareness for such problems and make our own learning process transparent.”

Other news organisations are working behind the scenes to get themselves to a point where they are ready to make such a public statement of intent.

The BBC has been hiring into its responsible data and AI team, whose job it is to build “a culture where everyone at the BBC is empowered to use data and AI/ML [machine learning] responsibly. For the past 100 years the BBC has been defined by its public service values – things like trust, impartiality, universality, diversity and creativity. We ensure that our use of data and AI/ML is aligned with those public service values and also our legal and regulatory obligations.”

The job advert went on to say the BBC is developing a “pan-BBC governance framework” to be implemented on an “ambitious timescale”. It comes as BBC News chief executive Deborah Turness warned that the impact AI was already having on the spread of disinformation was “nothing short of frightening”.

After discovering that ChatGPT had referred members of the public to at least two supposed articles by its journalists that turned out to be fake, The Guardian has created a “working group and small engineering team to focus on learning about the technology, considering the public policy and IP questions around it, listening to academics and practitioners, talking to other organisations, consulting and training our staff, and exploring safely and responsibly how the technology performs when applied to journalistic use”.

The Guardian’s head of editorial innovation Chris Moran has said the title will publish a “clear and concise explanation of how we plan to employ generative AI” in the coming weeks, ensuring it maintains the “highest journalistic standards” and stays accountable to the audience.

And Insider’s editor-in-chief Nicholas Carlson wrote to staff last week in a memo published by Semafor reporter Max Tani. Carlson said Insider journalists should not use any generative AI tools to write any sentences for publication – but this may change in the future after a pilot group of experienced editorial staff have carried out some experimentation.

Carlson gave three initial warnings about generative AI for those in the pilot group: that it can create falsehoods, that it plagiarises by presenting other people’s text as its own, and that its text can be “dull and generic”. “Make sure you stand by and are proud of what you file,” he wrote.

A lot of focus has gone on the potential pitfalls of AI-written content. But Beckett pointed out: “Journalism has always been fallible. It’s often done in a rush and often journalism has to be updated and corrected, and more context given… So I think before we start getting too pious, we have to check our own human flaws.”

Another risk is that AI introduces bias or discrimination into a story, but Beckett said this was common to any kind of AI work. He said he had noticed “bigger ethical problems” in other industries, for example a discriminatory algorithm for allocating teacher contracts in Italy that unfairly left people out of work.

Beckett added: “I don’t think there’s anything particularly to be frightened of. I mean, there’s plenty of risks inherent in the technology, like there is with lots of technology, but as long as you’re cautious then you can avoid or minimise many of those risks.

“And journalists are supposed to be good at this. We’re supposed to be edited. We’re supposed to be people who check for accuracy and we double check facts and we are critical about statements that are made. We say, well, are they true? We’re suspicious of dodgy narratives, and we’re supposed to edit each other. You’re supposed to have bosses who look at your copy and you’re supposed to correct things if they’re not accurate. This is what we’re supposed to do as journalists.”

Back to my interaction with ChatGPT. The chatbot itself appears very keen to mention “ethical standards of journalism” in discussions of this topic, using that phrase in multiple responses to my questions.

It warned that using any of its writing without telling readers would be “misleading… and could potentially compromise the ethical standards of journalism”. And if I disclose it? Yes, but “it is important to consider the limitations of using AI-generated content, as it may not always accurately reflect the nuances and complexities of human experience or the specific context of a particular news event.

“It is also important to ensure that any AI-generated content used in your news story is factually accurate, free from biases and is in compliance with the ethical standards of journalism.”

Email pged@pressgazette.co.uk to point out mistakes, provide story tips or send in a letter for publication on our "Letters Page" blog